"The pillars of this project are fidelity, scholarship and delight. To ensure this balance, Getty assembled a cross-functional project team spanning technologists, academics and creatives." (Getty)

Q: Tell us about your initial moodboard, wireframe, or prototype. How did things change throughout the process?

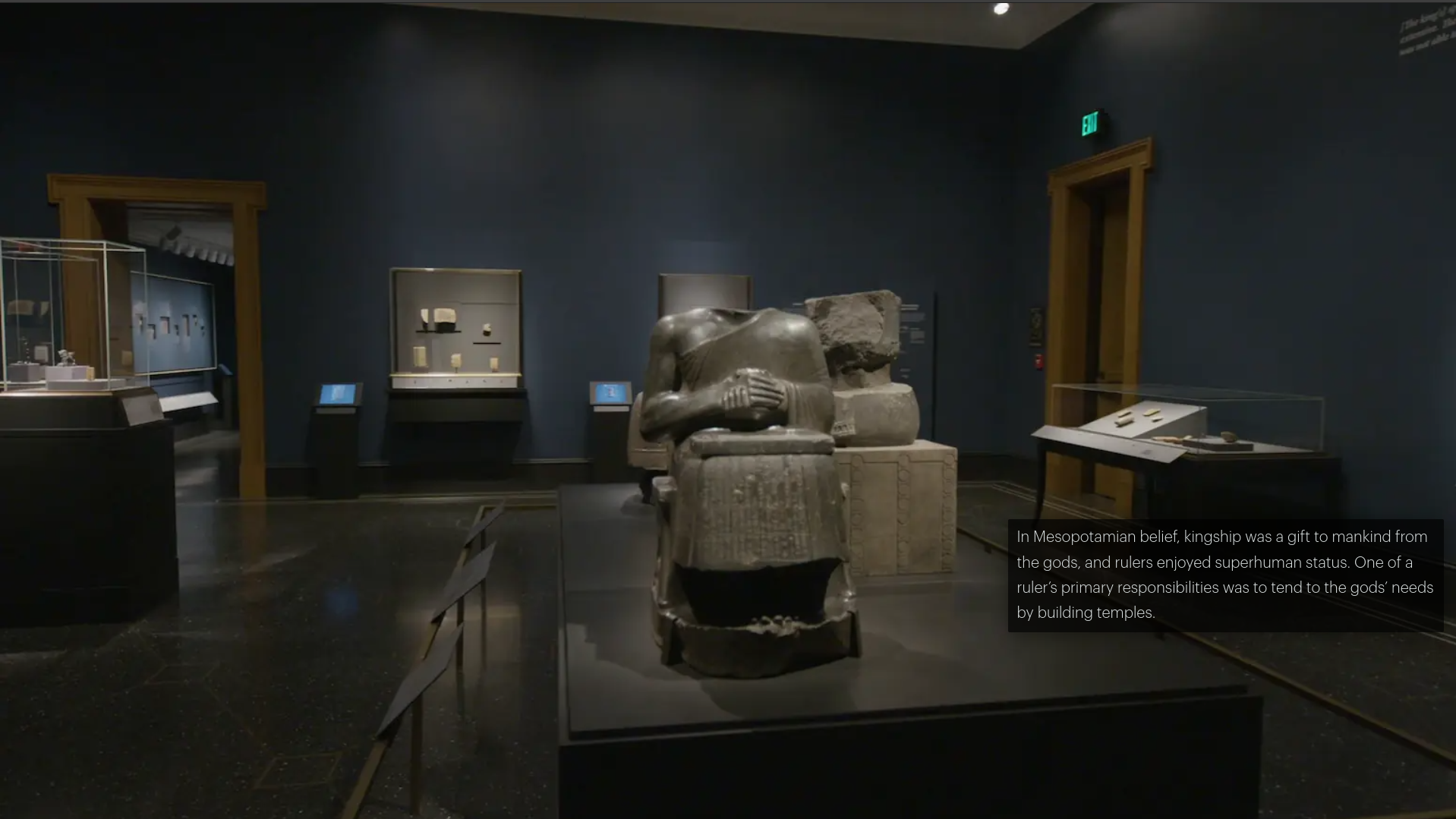

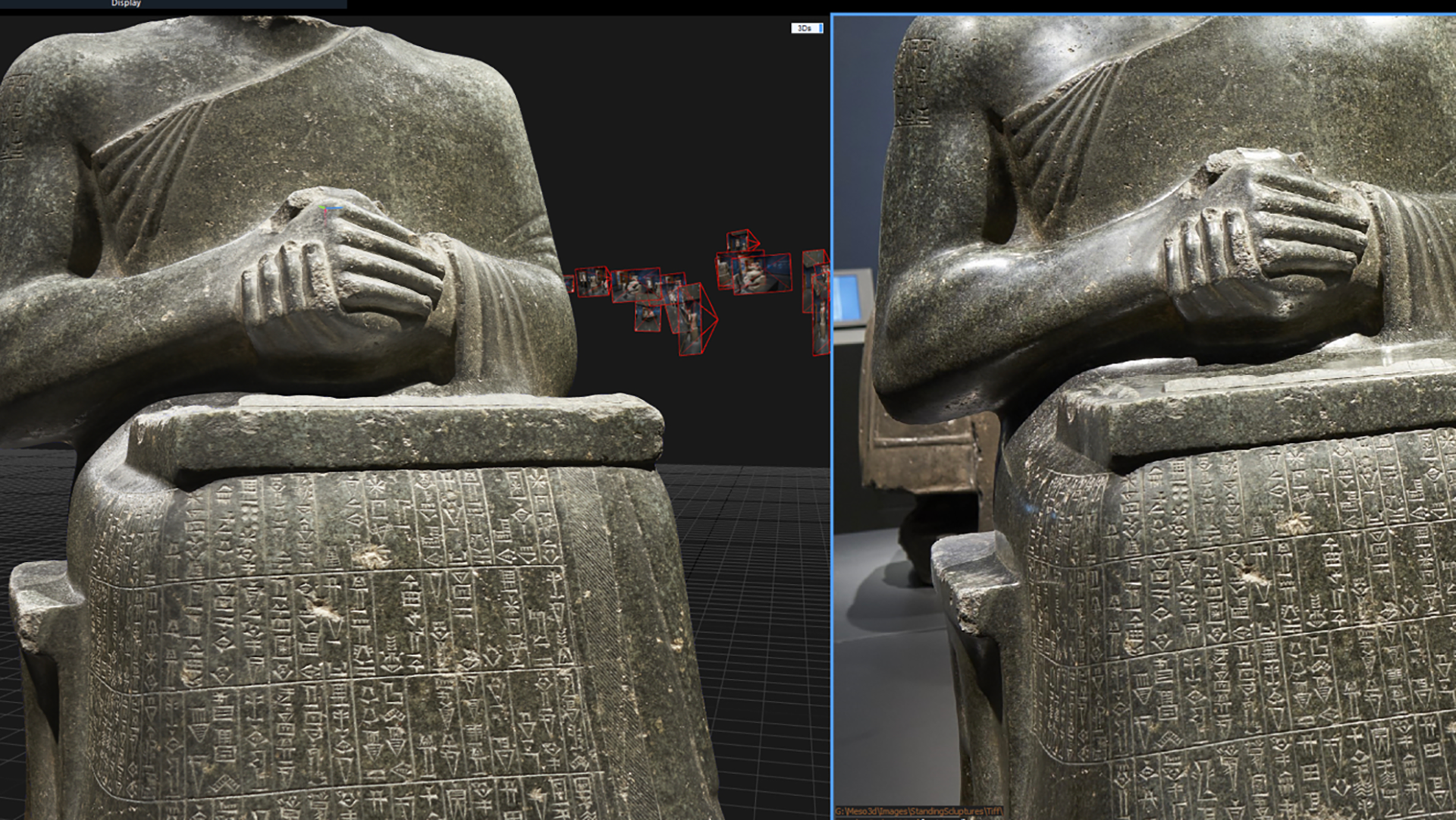

A: Getty’s first approach was to capture the entire exhibition in 3D. They modeled the space with LiDAR and structured-light scanning, then wrapped the model in photogrammetry image data. But rather than recreate the entire exhibition, Getty determined it would be more compelling to highlight key objects, which allowed a much closer look at fine details. The resulting object renders were gorgeous and informed Getty’s motion references.

Q: What influenced your chosen technical approach, and how did it go beyond past methods?

A: To maximize visual fidelity while minimizing file size, Final Form developed a custom image-processing toolchain that converts Getty’s 3D renders and videos into image sequences optimized for fast initial loading and high performance, even on limited bandwidth. Static image sequences allowed for a smooth, high-performing experience and greater flexibility while iterating. Crossfades, for example, could now be achieved with code.

Q: When did you experience a breakthrough or an "a-ha" moment during this project?

A: Early on, Final Form was impressed by AVIF, a newly released, optimized image file format that was notably being put to use by Netflix. Compressing image sequences with AVIF routinely gave Final Form a 2:1 savings in file size, and in some cases as much as 15:1, while achieving the same fidelity as JPG. After seeing these results, Final Form targeted AVIF as their top preference for serving images to visitors.
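Treating AVIF as the top preference usually means falling back to another format for browsers that cannot decode it. The TypeScript below is a minimal sketch of one way to do that by inspecting the request's `Accept` header. It is not Final Form's actual code: the WebP middle tier, the function names (`pickImageFormat`, `frameUrl`) and the frame file-naming pattern are all assumptions made for illustration.

```typescript
// Assumed preference order: AVIF first (as described above), then WebP
// (a hypothetical middle tier), with JPG as the universal fallback.
const PREFERRED_FORMATS = ["image/avif", "image/webp", "image/jpeg"] as const;
type ImageFormat = (typeof PREFERRED_FORMATS)[number];

// Collect the media types a client lists explicitly in its Accept header.
// Entries marked q=0 are explicitly refused, so they are skipped.
// Wildcards such as image/* are deliberately not treated as AVIF support.
function acceptedTypes(acceptHeader: string): string[] {
  const types: string[] = [];
  for (const part of acceptHeader.split(",")) {
    const [type, ...params] = part.trim().toLowerCase().split(";");
    const refused = params.some((p) => {
      const [key, value] = p.trim().split("=");
      return key === "q" && Number(value) === 0;
    });
    if (type && !refused) {
      types.push(type.trim());
    }
  }
  return types;
}

// Return the most preferred format the client explicitly accepts,
// or JPG when none of the modern formats is listed.
function pickImageFormat(acceptHeader: string): ImageFormat {
  const accepted = acceptedTypes(acceptHeader);
  for (const format of PREFERRED_FORMATS) {
    if (accepted.indexOf(format) !== -1) {
      return format;
    }
  }
  return "image/jpeg";
}

// Build the file name for one frame of an image sequence.
// The "<name>-<4-digit frame>.<ext>" pattern is hypothetical.
function frameUrl(baseName: string, frame: number, format: ImageFormat): string {
  const extensions: Record<ImageFormat, string> = {
    "image/avif": "avif",
    "image/webp": "webp",
    "image/jpeg": "jpg",
  };
  let index = String(frame);
  while (index.length < 4) {
    index = "0" + index;
  }
  return `${baseName}-${index}.${extensions[format]}`;
}
```

A server handler could call `pickImageFormat(request.headers.accept ?? "")` once per request and pass the result to `frameUrl` for every frame in the sequence. Where no server logic is available, the HTML `<picture>` element with `<source type="image/avif">` offers a comparable, purely declarative fallback.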