Very Big Things

Dan Marino Foundation - ViTA Project

Employment / Nominee

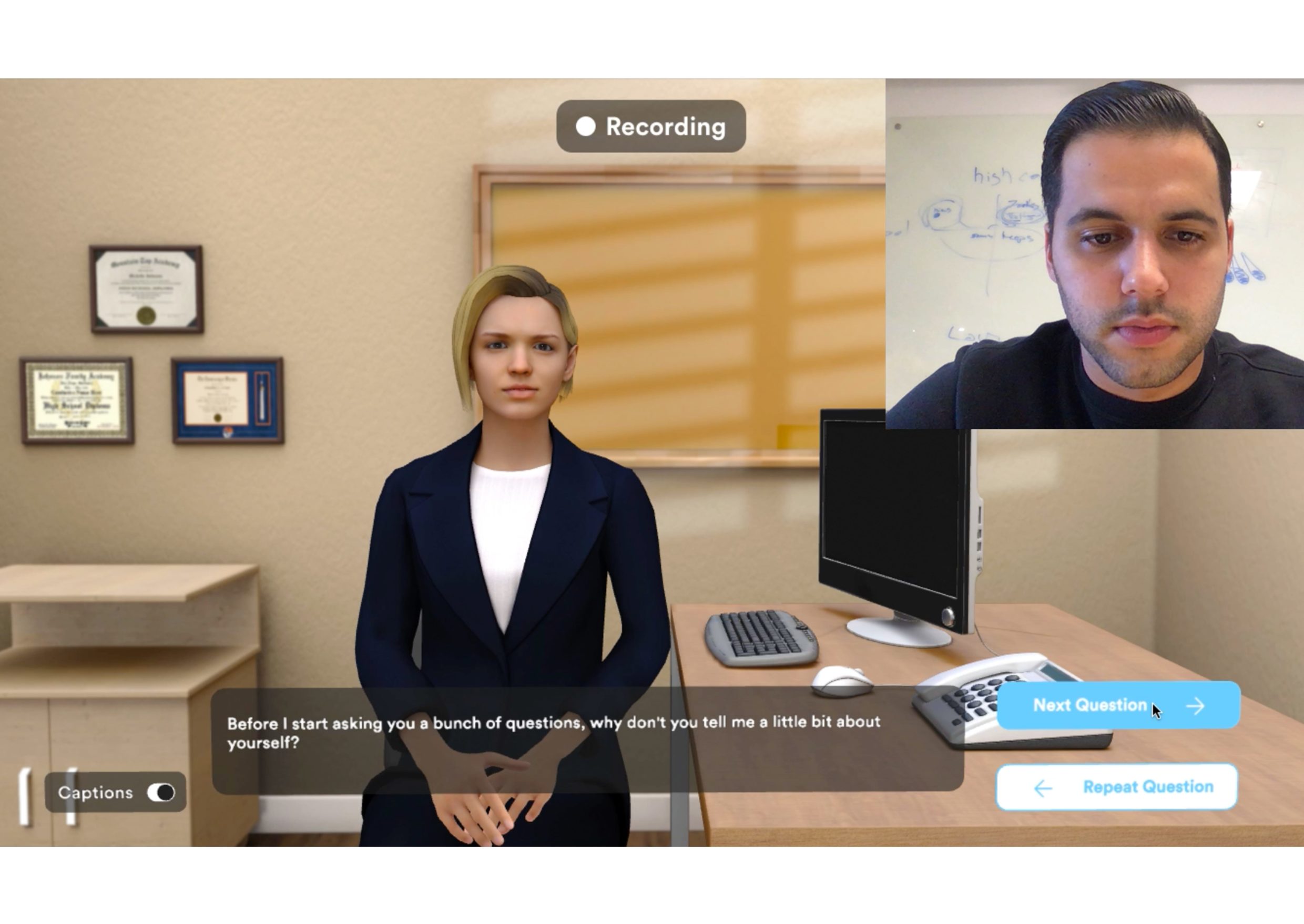

“For many people with developmental disabilities or autism, the job interview is the biggest obstacle to employment. This web-based virtual-reality interview training platform allows clients to practice interviewing as many times as they want, from the comfort of their own homes.” - Very Big Things Team

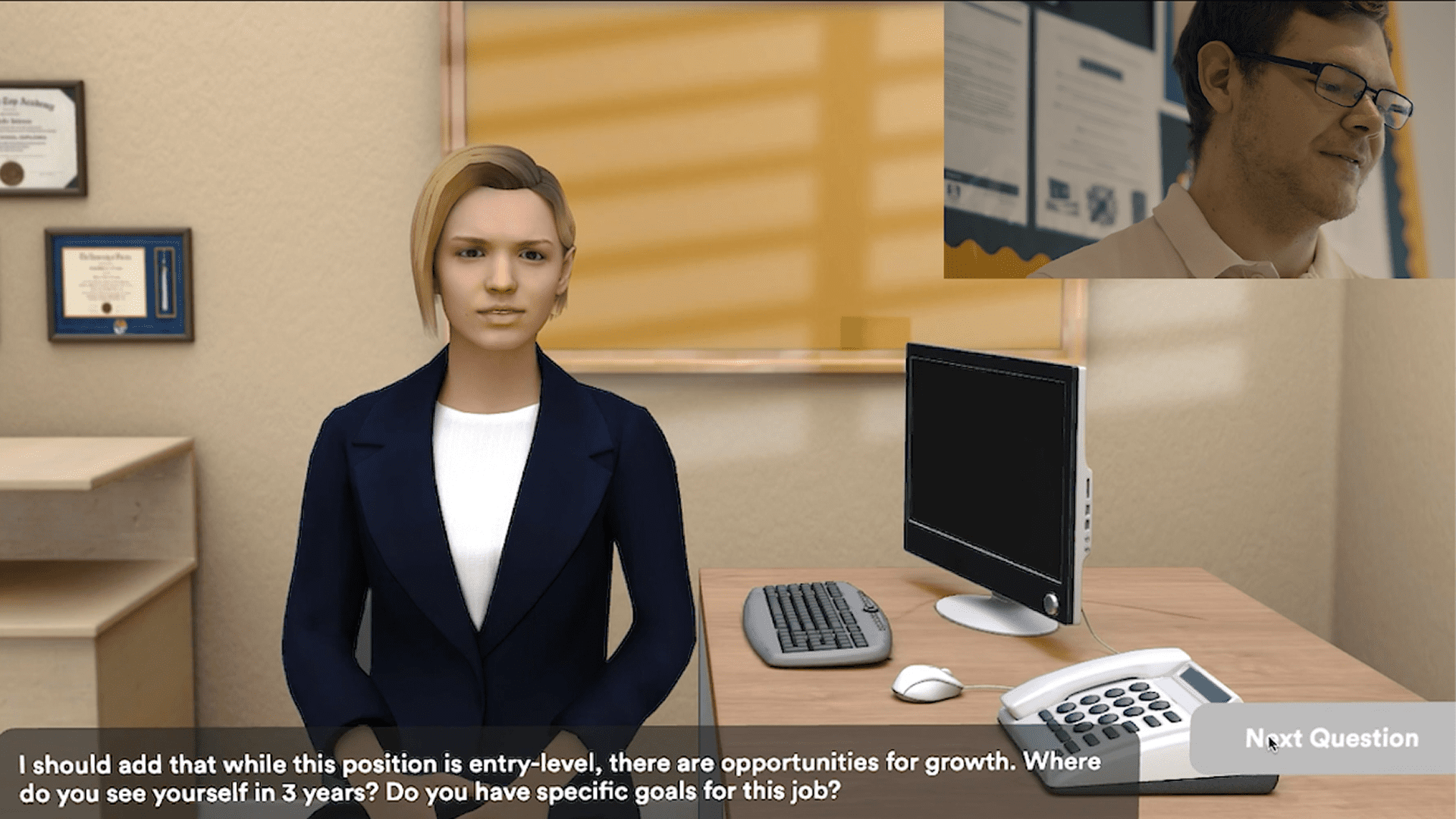

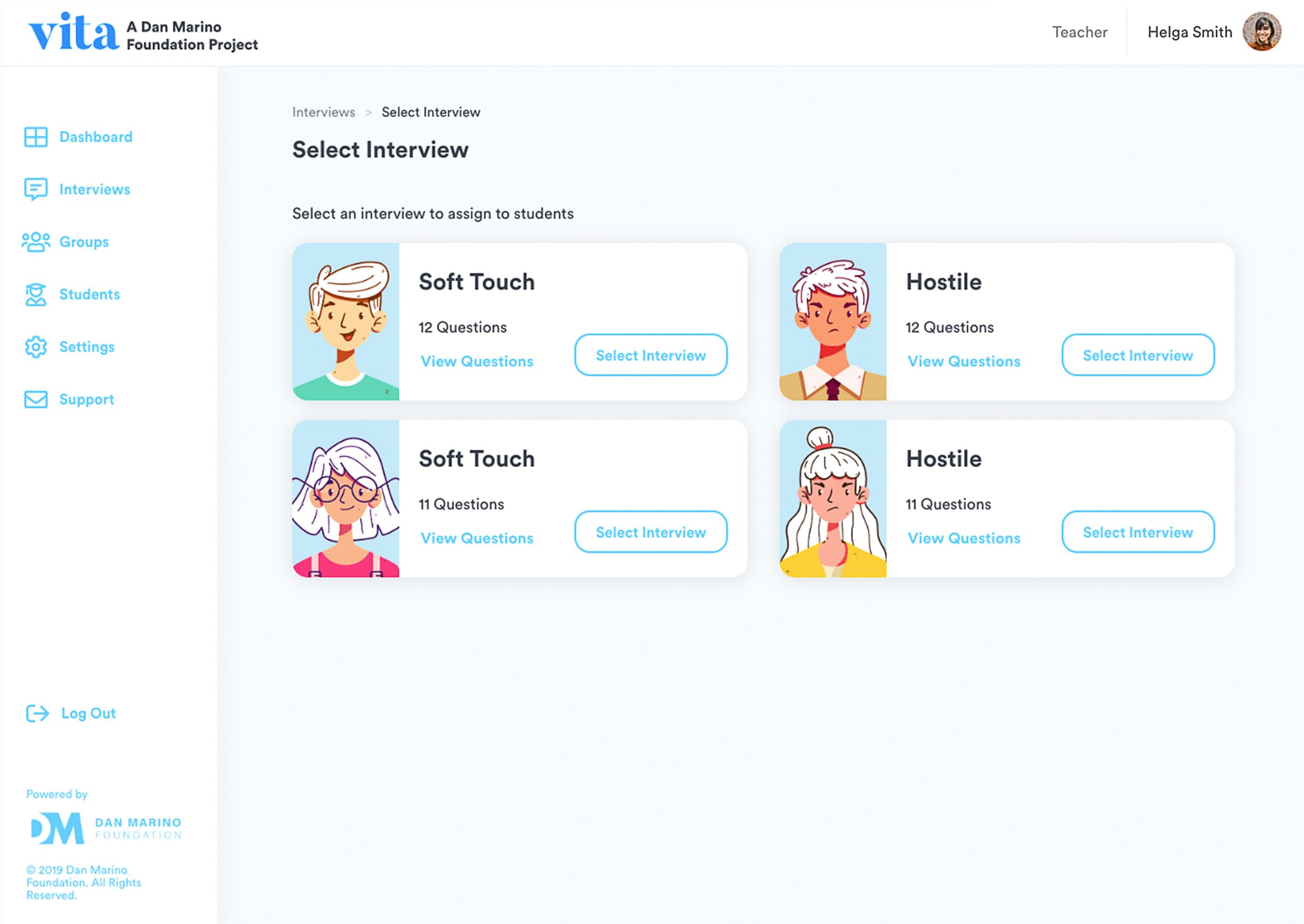

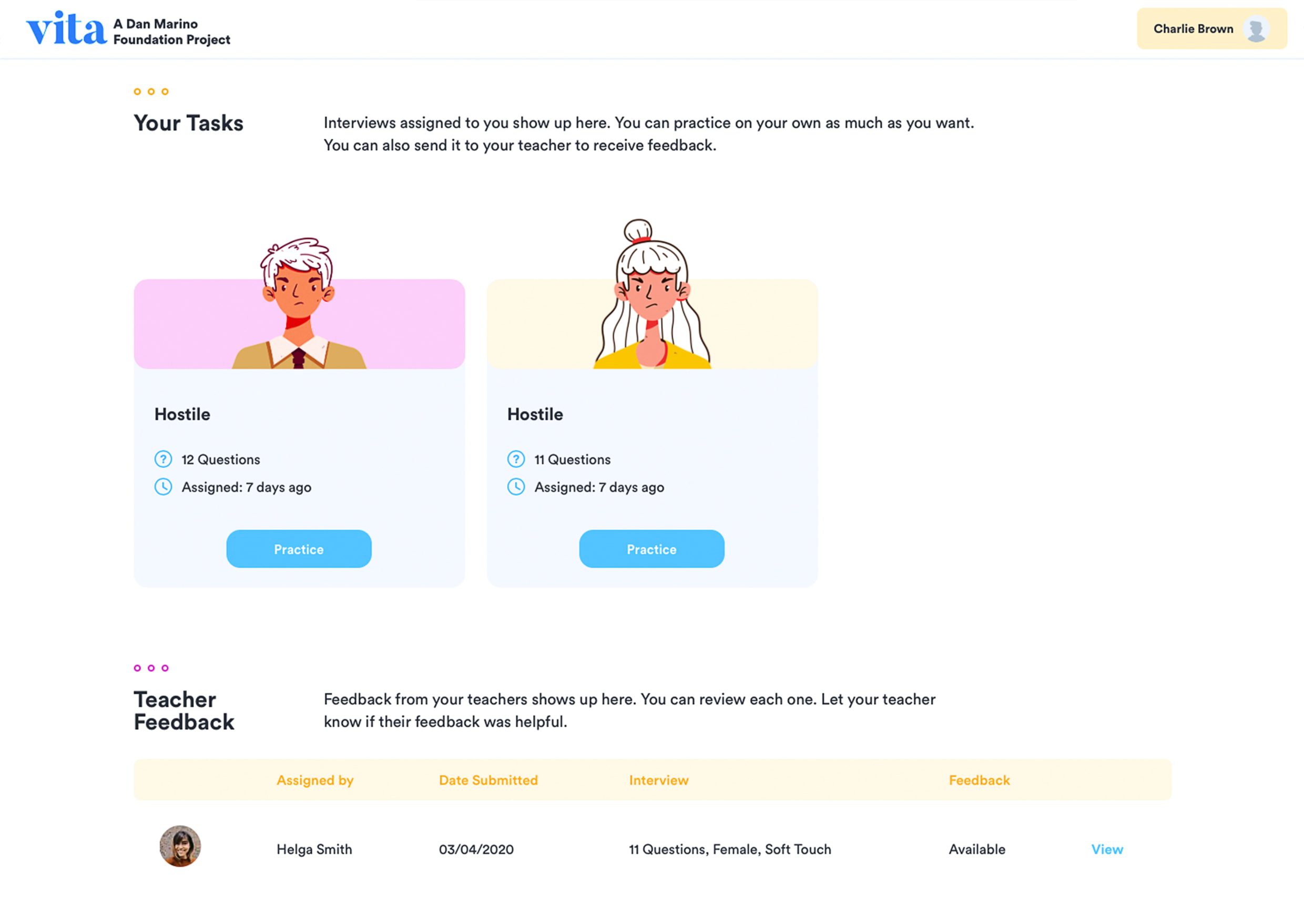

Q: Can you briefly describe your project and the concept behind it? A: The Dan Marino Foundation (DMF), a nonprofit dedicated to improving the lives of people with Autism Spectrum Disorder (ASD) and other developmental disabilities, wanted a platform that helps its clients prepare for interviews and other job-search-related communication. DMF also wanted the platform to be usable anywhere, extending access beyond its own constituents. We built a platform that immerses users in a mock interview, including interactions with highly realistic digital interviewers whose dispositions can be set to easy, moderate, or tough. Users can practice interviewing, the social interactions that happen during interviews, and other job-search-related communication.
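The article does not say how the easy, moderate, and tough settings are represented. As a minimal sketch, assuming each disposition is a preset of behavior parameters, it could look like the TypeScript below. `InterviewerProfile`, `PRESETS`, `profileFor`, `shouldAskFollowUp`, and every numeric value are hypothetical illustrations, not part of the ViTA platform.

```typescript
// Hypothetical sketch: field names and numbers are illustrative assumptions,
// not values taken from the ViTA platform.
type Disposition = "easy" | "moderate" | "tough";

interface InterviewerProfile {
  disposition: Disposition;
  smileFrequency: number;      // 0..1, how often the avatar shows a friendly expression
  followUpProbability: number; // 0..1, chance of a probing follow-up question
  pauseBeforeReplySec: number; // silence before the interviewer responds
}

const PRESETS: Record<Disposition, InterviewerProfile> = {
  easy: { disposition: "easy", smileFrequency: 0.8, followUpProbability: 0.1, pauseBeforeReplySec: 0.5 },
  moderate: { disposition: "moderate", smileFrequency: 0.5, followUpProbability: 0.4, pauseBeforeReplySec: 1.0 },
  tough: { disposition: "tough", smileFrequency: 0.1, followUpProbability: 0.8, pauseBeforeReplySec: 2.0 },
};

// Return a copy so one session can be tuned without mutating the shared preset.
function profileFor(disposition: Disposition): InterviewerProfile {
  return { ...PRESETS[disposition] };
}

// Decide whether to ask a follow-up; `roll` is a random number in [0, 1).
function shouldAskFollowUp(profile: InterviewerProfile, roll: number): boolean {
  return roll < profile.followUpProbability;
}

const session = profileFor("tough");
console.log(shouldAskFollowUp(session, Math.random()));
```

Keeping the presets as plain data means a new disposition could be added without touching the interview logic.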

Q: Talk about your initial prototypes. How did those ideas change throughout design and execution? A: There were several user archetypes we were solving for, not only those in the ASD community. We encouraged the creation of as many concepts as possible. We block-framed and wireframed quickly before considering UI, colors, or typography, and created prototypes to validate the best ideas, which evolved as new data presented itself. We based the mood board on both the existing DMF brand standards and our newly created visuals. We designed the simulations separately, as they needed to emulate real-world interviews to desensitize users to social interactions. We were selective with colors, in-room objects, model mannerisms, and other elements to set the simulation apart from the rest of the platform.

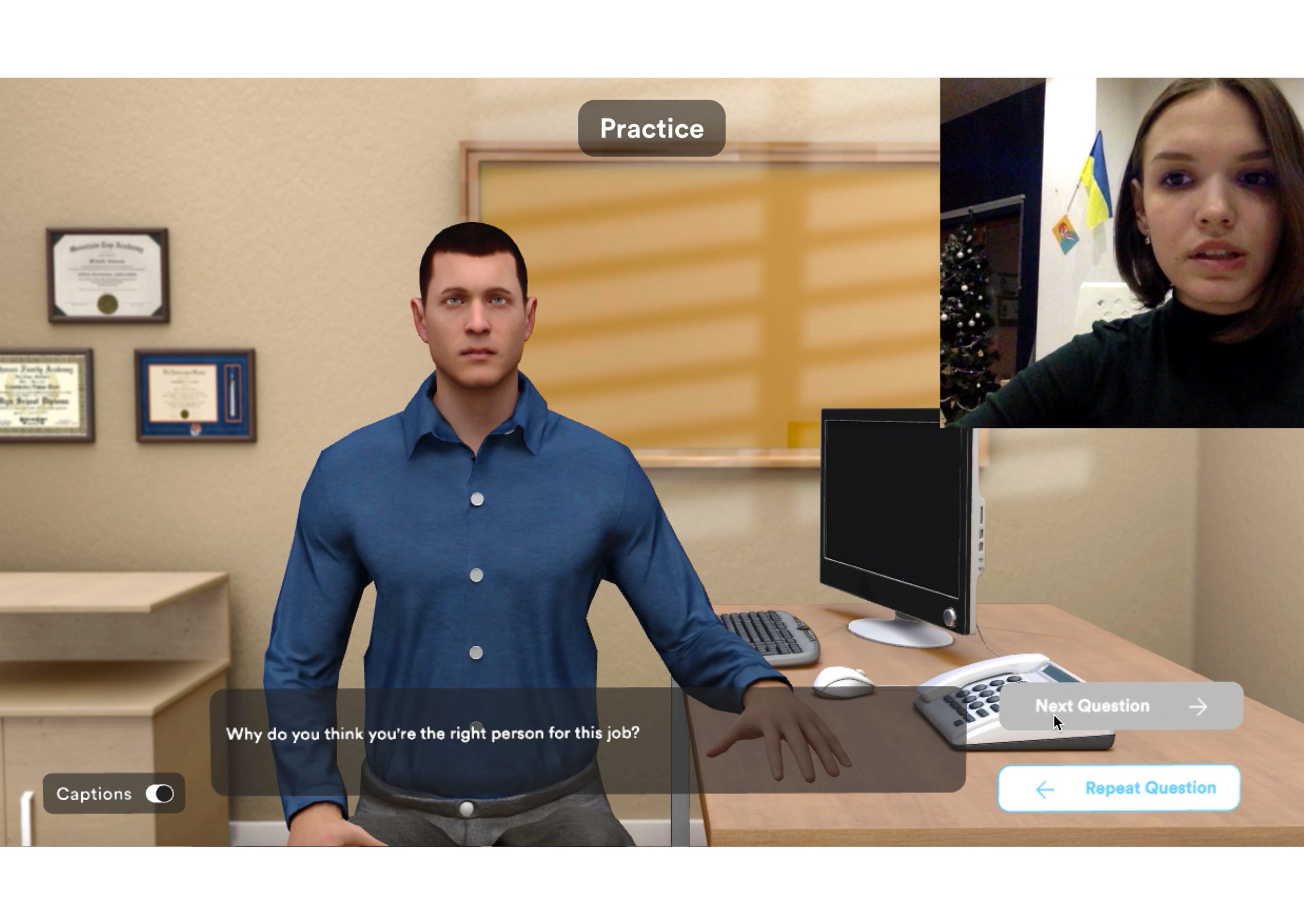

Q: What influenced your chosen technical approach, and how did it go beyond past methods? A: The engineering and design were heavily influenced by the goal of building a product that worked for this specific market. For many people with developmental disabilities or autism, the job interview is the biggest obstacle to employment. This web-based virtual-reality interview training platform allows clients to practice interviewing as many times as they want, from the comfort of their own homes. This not only builds their confidence in interviewing but also builds key interpersonal communication skills, such as making eye contact and displaying expected mannerisms.

Q: What web technologies, tools, or resources did you use to develop this? A: We accomplished this engineering breakthrough through cutting-edge graphics libraries, advanced engineering, and attention to the user experience. We used Three.js, a cross-browser JavaScript library, to create 3D computer graphics. We also used custom 3D frameworks for WebGL, creating skeletons for the models inside the web browser and animating them with lifelike movements such as blinking and looking around. We added effects such as subsurface scattering, which mimics the natural appearance of skin (like light passing through a candle), and other light-based visuals, including soft shadows.
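To make two of these effects concrete, here is a small sketch of a blink curve and a "wrap lighting" term. Wrap lighting is a common, cheap approximation of subsurface scattering. It is not necessarily what Very Big Things implemented, and the default timings and wrap factor are assumptions. In a Three.js scene, the blink weight could be written each frame to a mesh's `morphTargetInfluences` entry, provided the head model has an eyelid morph target. The lighting term would normally run per pixel in a GLSL shader and is written in TypeScript here only for readability.

```typescript
// Illustrative helpers; the default timings and the wrap factor are assumptions.
type Vec3 = [number, number, number];

const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

// Eyelid closure weight at time t (seconds): 0 = open, 1 = fully closed.
// A blink starts every `intervalSec` seconds and lasts `durationSec`.
function blinkWeight(t: number, intervalSec = 4, durationSec = 0.15): number {
  if (t < 0) return 0;
  const sinceBlinkStart = t % intervalSec;
  if (sinceBlinkStart >= durationSec) return 0;
  // Half a sine wave: the lid closes, then reopens, with no sudden jumps.
  return Math.sin(Math.PI * (sinceBlinkStart / durationSec));
}

// "Wrap" diffuse lighting: lets light spill past the shadow line, softening it
// the way light diffusing under skin does. `normal` and `toLight` must be unit
// vectors; wrap = 0 reduces to plain Lambert shading.
function wrapDiffuse(normal: Vec3, toLight: Vec3, wrap: number): number {
  return Math.max(0, (dot(normal, toLight) + wrap) / (1 + wrap));
}

// A surface lit edge-on (light at 90 degrees to the normal): dark under
// Lambert, still faintly lit with wrap lighting.
console.log(wrapDiffuse([0, 0, 1], [1, 0, 0], 0));   // 0
console.log(wrapDiffuse([0, 0, 1], [1, 0, 0], 0.5)); // about 0.333
```

Real blinks are irregular. A fuller version would randomize the interval between blinks so the avatar does not appear mechanical.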

Q: What breakthrough or “a-ha” moment did you experience when concepting or executing this project? A: Our a-ha moment came when we figured out how to make interactions with these avatars as realistic as possible. We began by creating 3D models of the interviewer and the environment, including the mesh and textures, gesture animations, and lighting to soften the look of both models. We then created four interviews in the 3D environment, added the questions, and synchronized the models’ voices and gestures. Finally, we developed the web service: integrating the 3D application into the web application, creating user roles and management features, and building and testing interview recording and review.
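The article does not describe how voices and gestures were synchronized. One common approach is to key each gesture to a timestamp in the voice recording and fire it when playback passes that point. The sketch below assumes that approach, and `GestureCue`, `dueCues`, and the clip names are hypothetical. In a browser, the times would come from the audio clock (for example `HTMLAudioElement.currentTime`) rather than from a separate timer, so gestures stay aligned with speech even if playback stalls. In Three.js, a due cue might then start a clip through `AnimationMixer.clipAction(clip).play()`.

```typescript
// Hypothetical cue format: each gesture is keyed to a time in the
// interviewer's recorded voice line for the current question.
interface GestureCue {
  atSec: number;   // start time relative to the start of the audio
  gesture: string; // name of an animation clip (illustrative names below)
}

// Cues whose start time falls in (prevSec, nowSec]. Calling this once per frame
// with the previous and current audio times fires each cue exactly once.
function dueCues(cues: GestureCue[], prevSec: number, nowSec: number): GestureCue[] {
  return cues.filter((c) => c.atSec > prevSec && c.atSec <= nowSec);
}

const cues: GestureCue[] = [
  { atSec: 0, gesture: "smile" },
  { atSec: 1.2, gesture: "nod" },
  { atSec: 3.5, gesture: "lean-forward" },
];

// Simulated frame times; in a browser these would come from the audio clock.
let prev = -Infinity;
for (const now of [0, 0.5, 1.3, 4]) {
  for (const cue of dueCues(cues, prev, now)) {
    console.log(`${now}s: play ${cue.gesture}`);
  }
  prev = now;
}
// 0s: play smile
// 1.3s: play nod
// 4s: play lean-forward
```

The half-open interval prevents a cue from firing twice when two frames share a boundary time. A backward seek (where `prevSec > nowSec`) simply fires nothing, which a real player would need to handle explicitly.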

Q: How did you balance your creative and technical capabilities with the client’s brand? A: The Dan Marino Foundation has earned the trust of the ASD community as well as that of people with other developmental disabilities. Our challenge was to keep a common thread with their original brand while delivering an entirely new digital experience. We used the whimsical feel of the DMF logo mark and its colors as our compass for the brand. Our team created custom illustrations and chose related colors for the platform that expanded on the DMF brand. In doing this, we were able to deliver a brand-new experience without losing the trust DMF has already earned.

Q: How did the final product defy your expectations? A: The final product was exactly what we hoped it would be: a fantastic user experience backed by groundbreaking technology. We successfully created mock job interviews in a simulation that begins with a friendly avatar interviewer who is easy to interact with. The experience then progresses through difficult personalities, social interactions, and situations. We created a lower-stress environment, with design, color choices, typography, and text designed for maximum usability and accessibility. The simulation also had to be as lifelike as possible, to give users the confidence and skills to communicate with people in real-life situations.
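The article says the experience starts with a friendly interviewer and moves toward more difficult personalities, but it does not say when a user advances. As a minimal sketch, assuming one level per completed interview and capping at the hardest level, the rule could be written as follows. `LEVELS` and `nextLevel` are hypothetical names.

```typescript
// Hypothetical progression rule: start with the friendly, easy interviewer and
// move up one level after each completed interview, capping at the hardest.
const LEVELS = ["easy", "moderate", "tough"] as const;
type Level = (typeof LEVELS)[number];

function nextLevel(current: Level): Level {
  const i = LEVELS.indexOf(current);
  return LEVELS[Math.min(i + 1, LEVELS.length - 1)];
}

let level: Level = LEVELS[0];
for (let done = 1; done <= 4; done++) {
  level = nextLevel(level);
  console.log(`after interview ${done}: ${level}`);
}
// after interview 1: moderate
// after interview 2: tough
// after interview 3: tough
// after interview 4: tough
```

Other rules would fit the same shape. For example, a user could repeat a level until they feel ready, with `nextLevel` called only on their request.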

Q: Why is this an exciting time to create new digital experiences? How does your team fit into this? A: We couldn’t be more excited for what is to come and the opportunities we’ll have to create absurdly great digital products. We’re in a very interesting time, seeing digital transformation across a variety of industries. We see how much our expertise is needed in today’s market, and we look forward to creating digital products that make a strong impact in sectors such as education, healthcare, and anything tele-related.